Singular Value Decomposition (SVD)

@(LinearAlgebra)

SVD

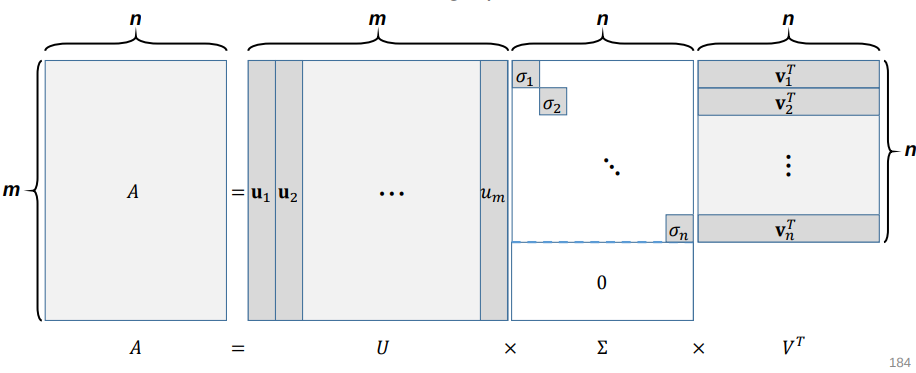

Given a rectangular matrix , its singular value decomposition is written as

where

- , : matrices with orthonormal columns, providing an orthonormal basis of Col and Row , respectively.

- : a diagonal matrix whose entries are in a decreasing order, i.e.,

Basic Form of SVD

Given a rectangular matrix where , SVD gives

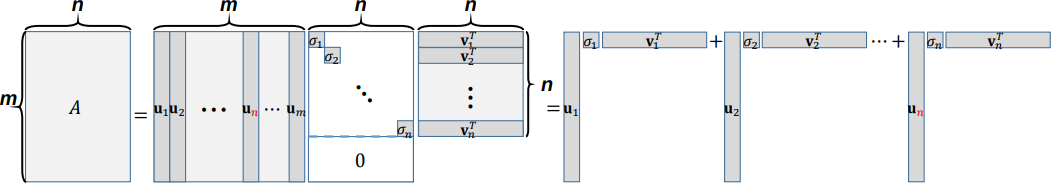

SVD as Sum of Rand 1 Outer Products

can also be represented as the sum of outer products

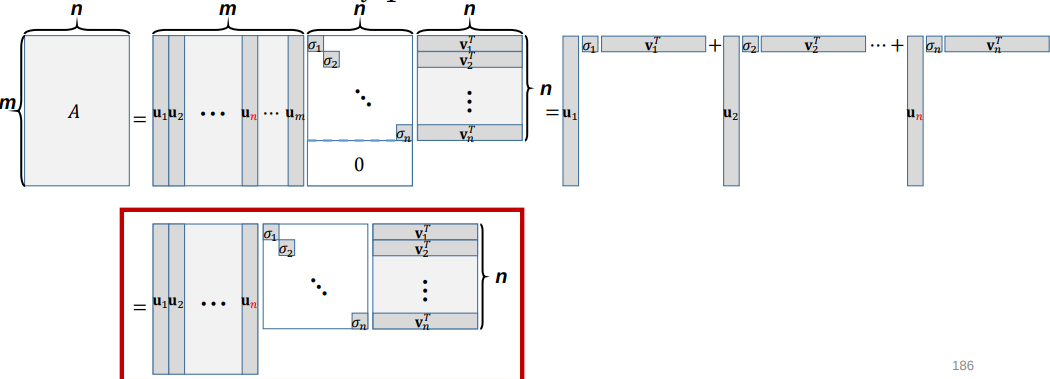

Reduced Form of SVD

can also be represented as the sum of outer products

Another Perspective of SVD

- We can easily find two orthonormal basis sets, for Col and for Row , by using, say, Gram–Schmidt orthogonalization.

- Are these unique orthonormal basis sets?

No. Then, can we jointly find them such that

Let us denote , , and

- Consider and

- since has orthonormal columns.

- Thus, .

Computing SVD

- First, we form and and compute eigendecomposition of each:

- Can we find the following?

- Orthogonal eigenvector matrices and

- Eigenvalues in that are all positive

- Eigenvalues in that are shared by and

- Yes, since and are symmetric positive (semi-)definite.

Symmetric Positive Definite Matrices and Spectral Decomposition

• If is symmetric and positive-definite, then the spectral decomposition will have all positive eigvenvalues:

where .

and are symmetric positive (semi-)definite!

Symmetric:

Positive (semi-)definite

Thus, we can find

- Orthogonal eigenvector matrices and .

- Eigenvalues in that are all positive

Things to Note

- Given any rectangular matrix , its SVD always exists.

- Given a square matrix , its eigendecomposition does not always exist, but its SVD always exists.

- Given a square, symmetric positive (semi-)definite matrix , its eigendecomposition always exists, and it is actually the same as its SVD.