Symmetric Matrices and Quadratic Forms

7.1 DIAGONALIZATION OF SYMMETRIC MATRICES

A symmetric matrix is a matrix such that . Such a matrix is necessarily square. Its main diagonal entries are arbitrary, but its other entries occur in pairs—on opposite sides of the main diagonal.

EXAMPLE 2

This example treats a matrix whose eigenvalues are all distinct.

If possible, diagonalize the matrix .

Solution

The characteristic equation of is

Standard calculations produce a basis for each eigenspace:

These three vectors form a basis for . In fact, it is easy to check that is an orthogonal basis for .

Experience from Chapter 6 suggests that an orthonormal basis might be useful for calculations, so here are the normalized (unit) eigenvectors.

Let

Then , as usual. But this time, Since is square and has orthonormal columns, is an orthogonal matrix, and is simply (See Section 6.2).

Theorem 1 explains why the eigenvectors in Example 2 are orthogonal—they correspond to distinct eigenvalues.

Theorem 1

If is symmetric, then any two eigenvectors from different eigenspaces are orthogonal.

Proof

Let and be eigenvectors that correspond to distinct eigenvalues, say, and . To show that , compute

Hence . But , so

An matrix is said to be orthogonally diagonalizable if there are an orthogonal matrix (with ) and a diagonal matrix such that

Such a diagonalization requires linearly independent and orthonormal eigenvectors. When is this possible? If is orthogonally diagonalizable as in (1), then

Thus is symmetric! Theorem 2 below shows that, conversely, every symmetric matrix is orthogonally diagonalizable. The proof is much harder and is omitted; the main idea for a proof will be given after Theorem 3.

Theorem 2

An matrix is orthogonally diagonalizable if and only if is a symmetric matrix.

This theorem is rather amazing, because the work in Chapter 5 would suggest that it is usually impossible to tell when a matrix is diagonalizable. But this is not the case for symmetric matrices.

EXAMPLE 3

This example treats a matrix whose eigenvalues are not all distinct.

Orthogonally diagonalize the matrix , whose characteristic equation is

Solution

The usual calculations produce bases for the eigenspaces:

Although and are linearly independent, they are not orthogonal. Recall from Section 6.2 that the projection of onto is , and the component of orthogonal to is

Then is an orthogonal set in the eigenspace for . (Note that is a linear combination of the eigenvectors and , so is in the eigenspace. This construction of is just the Gram–Schmidt process of Section 6.4.) Since the eigenspace is two-dimensional (with basis , , the orthogonal set is an orthogonal basis for the eigen space, by the Basis Theorem. (See Section 2.9 or 4.5)

Normalize and to obtain the following orthonormal basis for the eigenspace for :

An orthonormal basis for the eigenspace for is

By Theorem 1, is orthogonal to the other eigenvectors and . Hence is an orthonormal set. Let

Then orthogonally diagonalizes , and .

In Example 3, the eigenvalue 7 has multiplicity two and the eigenspace is two-dimensional. This fact is not accidental, as the next theorem shows.

Theorem 3 : The Spectral Theorem

The set of eigenvalues of a matrix A is sometimes called the spectrum of A , and the following description of the eigenvalues is called a spectral theorem.

The Spectral Theorem for Symmetric Matrices

An symmetric matrix has the following properties:

a. has real eigenvalues, counting multiplicities.

b. The dimension of the eigenspace for each eigenvalue equals the multiplicity of as a root of the characteristic equation.

c. The eigenspaces are mutually orthogonal, in the sense that eigenvectors corresponding to different eigenvalues are orthogonal. d. is orthogonally diagonalizable.

- Part (a) follows from Exercise 24 in Section 5.5.

- Part (b) follows easily from part (d). (See Exercise 31.)

- Part (c) is Theorem 1.

- Because of (a), a proof of (d) can be given using Exercise 32 and the Schur factorization discussed in Supplementary Exercise 16 in Chapter 6.

- The details are omitted.

Spectral Decomposition

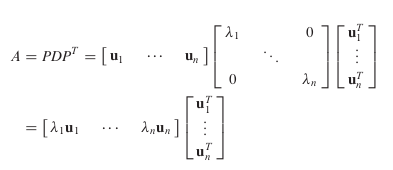

Suppose , where the columns of are orthonormal eigenvectors of and the corresponding eigenvalues are in the diagonal matrix . Then, since ,

Using the column–row expansion of a product (Theorem 10 in Section 2.4), we can write

This representation of is called a spectral decomposition of A because it breaks up into pieces determined by the spectrum (eigenvalues) of .

- Each term in (2) is an matrix of rank 1(번역본에서 계수 1).

- For example, every column of is a multiple of .

- Furthermore, each matrix is a projection matrix in the sense that for each in , the vector is the orthogonal projection(번역본에서 전사 이나 투영 이 개인적으로 나은듯) of onto the subspace spanned by .

EXAMPLE 4

Construct a spectral decomposition of the matrix that has the orthogonal diagonalization

Solution

Denote the column of by and . Then

To verify this decomposition of , compute

and