Ch05. Eigenvectors and Eigenvalues

5.1 Eigenvectors and Eigenvalues

We will study special vectors which are transformed by into scalar multiples of themselves.

Definition

- An eigenvector of an matrix is a nonzero vector such that for some scalar .

- A scalar is called an eigenvalue of if there is a nontrivial solution of ; such an is called an eigenvector corresponding to .

is an eigenvalue of an matrix if and only if the equation has a nontrivial solution ().

- The set of all solutions of (3) is just the null space of the matrix .

- So this set is

- a subspace of and

- is called the eigenspace of corresponding to .

- The eigenspace consists of the zero vector and all the eigenvectors corresponding to .

- Eigen vector

- Eigen value

- Eigen space!

Example 3

Show that 7 is an eigenvalue of matrix and find the corresponding eigenveoctors.

Solution:

The scalar 7 is an eigenvalue of if and only if the equation has a nontrivial solution.

- But (1) is equivalent to , or

- To solve this homogeneous equation, form the matrix

- The columns of are obviously linearly dependent, so (2) has nontrivial solutions.

To find the corresponding eigenvectors, use row operations:

- The general solution has the form .

- Each vector of this form with is an eigenvector corresponding to .

Example 4

Let . An eigenvalue of is 2. Find a basis for the corresponding eigenspace.

Solution:

Form and row reduce the augmented matrix for .

- At this point, it is clear that 2 is indeed an eigenvalue of because the equation has free variables.

- The general solution is , free.

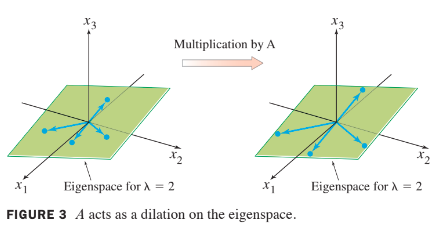

The eigenspace, shown in the following figure, is a two-dimensional subspace of . A basis is

Example 4 shows a good method for manual computation of eigenvectors in simple cases when an eigenvalue is known. Using a matrix program and row reduction to find an eigenspace (for a specified eigenvalue) usually works, too, but this is not entirely reliable. Roundoff error can lead occasionally to a reduced echelon form with the wrong number of pivots. The best computer programs compute approximations for eigenvalues and eigenvectors simultaneously, to any desired degree of accuracy, for matrices that are not too large. The size of matrices that can be analyzed increases each year as computing power and software improve.

The following theorem describes one of the few special cases in which eigenvalues can be found precisely.

Theorem 1

The eigenvalues of a triangular matrix are the entries on its main diagonal.

Proof

For simplicity, consider the case.

- If is upper tirangular, the ahs the form

- The scalar is an eigenvalue of if and only if the equation has a nontrivial solution, that is, if and only if the equation has a free variable.

- Because of the zero entries in , it is easy to see that has a free variable if and only if at least one of the entries on the diagonal of is zero.

- This happens if and only if equals one of the entries in .

Theorem 2

If are eigenvectors that correspond to distinct eigenvalues of an matrix , then the set is linearly independent.

Proof

Suppose is linearly dependent.

- Since is nonzero, Theorem 7 in Section 1.7 says that one of the vectors in the set is a linear combination of the preceding vectors.

- Let be the least index such that is a linear combination of the preceding (linearly independent) vectors.

- Then there exist scalars such that

- Multiplying both sides of (5) by and using the fact that for each , we obtain

- Multiplying both sides of (5) by and subtracting the result from (6), we have

- Since is linearly independent, the weidhts in (7) are all zero.

- But none of the factors are zero, because the eigenvalues are distinct.

- Hence for .

- But then (5) says that , which is impossible.

- Hence cannot be linearly dependent and therefore must be linearly independent.

Eigenvectors and Difference Equations

There is a difference equation

- If is an matrix, then (8) is a recursive description of a sequence in .

- A solution of (8) is an explicit description of whose formula for each does not depend directly on or on the preceding terms in the sequence other than the initial term .

- The simplest way to build a solution of (8) is to take an eigenvector and its corresponding eigenvalue and let

- This sequence is a solution because