Ch 07 Symmetric Matrices and Quadratic Forms

7.4 The Singular Value Decomposition (1)

The diagonalization theorems in Sections 5.3 and 7.1 play a part in many interesting applications. Unfortunately, as we know, not all matrices can be factored as with D diagonal. However, a factorization is possible for any matrix A! A special factorization of this type, called the singular value decomposition, is one of the most useful matrix factorizations in applied linear algebra.

The singular value decomposition is based on the following property of the ordinary diagonalization that can be imitated for rectangular matrices: The absolute values of the eigenvalues of a symmetric matrix measure the amounts that stretches or shrinks certain vectors (the eigenvectors).

If and , then

If is the eigenvalue with the greatest magnitude, then a corresponding unit eigenvector identifies a direction in which the stretching effect of is greatest. That is, the length of is maximized when , and , by (1). This description of and has an analogue for rectangular matrices that will lead to the singular value decomposition.

Example 1

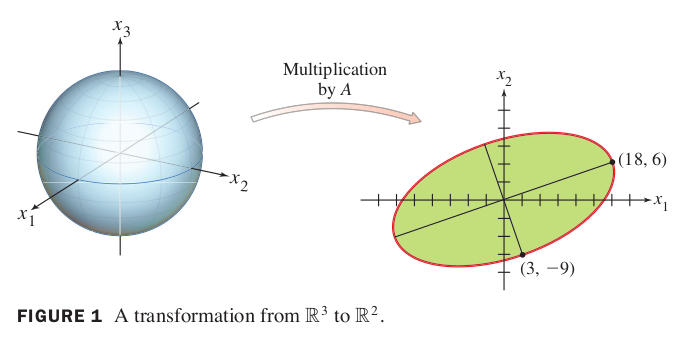

If , then the linear transformation maps the unit sphere in onto an ellipse in , shown figure 1. Find a unit vector at which the length is maximized, and compute this maximum length.

Solution of Example 1

Example 1 suggests that the effect of on the unit sphere in is related to the quadratic form . In fact, the entire geometric behavior of the transformation is captured by this quadratic form, as we shall see.

The Singular Values of an by Matrix

Let be an matrix. Then is symmetric and can be orthogonally diagonlized. Let be an orthonormal basis for consisting of eigenvectors of , and let be the associated eigenvalue of . Then, for ,

So the eigenvalues fo are all nonnegative. By renumbering, if necessary, we may assume that the eigenvalues are arranged so that

The singular values of are the square roots of the eigenvalues of , denoted by , and they are arranged in decreaing order. That is, for .

By equation (2), *the singular values of are the lengths of the vectors .

Theorem 9

Suppose is an orthonormal basis of consisting of eigenvectors of , arranged so that corresponding eigenvalues of satisfy , and suppose has nonzero singular values. Then is an orthogonal basis for , and

Proof of Theorem 9

Because and are orthogonal for ,

Thus is an orthogonal set. Furthermore, since the length of the voectors are the singular values of , and since there are nonzero singular vectors, if and only if . So are linearly independent vectors, and they are in . Finally, for any in —say,—we can write , and

Thus is in Span , which shows that is an (orthogonal) basis for . Hence .

Theorem 10: The Singular Value Decomposition

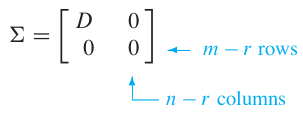

he decomposition of involves an “diagonal” matrix of the form

where is an diagonal matrix for some not excedding the smaller of and . (If equals or or both, some or all of the zero matrices do not appear.)

Let be an matrix with rank . Then there exists an matrix as in (3) for which the diagonal entries in are the first singular values of , , and there exists an orthogonal matrix and an orthogonal matrix such that

Any factorization , with and orthogonal, as in (3), and positive diagonal entries in , is called a singular value decomposition (or SVD) of .

The columns of in such a decomposition are called left singular vectors of , and the columns of are called right singular vectors of .

Proof of SVD

Let and be as in Theorem 9, so that is an orthogonal basis for .

Normalize each to obtain an orthonormal basis , where

And

Now extend to an orthonormal basis of , and let

and

By construction, and are orthogonal matrices. Also, from (4),

Let be the diagonal matrix with diagonal entries , and let be as in (3) above. Then

![e1][./fig/la_07_04_e1.png]

Since is an orhtogonal matrix, .

The next two examples focus attention on the internal structure of a singular value decomposition. An efficient and numerically stable algorithm for this decomposition would use a different approach.